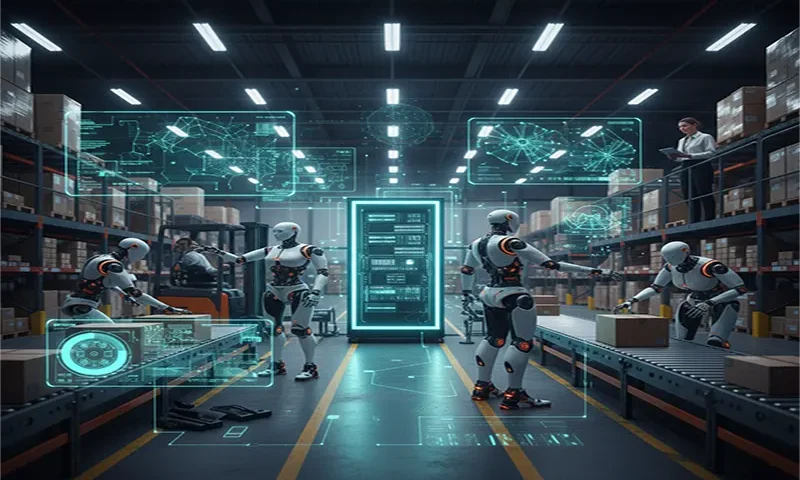

The logistics industry is at the start of a major shift. For decades, “automation” meant fixed conveyor belts and Automated Guided Vehicles (AGVs) following magnetic strips. Today, the field is moving toward autonomy, powered by Embodied AI: the intelligence that allows a machine to perceive, reason, and physically interact with its environment in a human-like way.

1. From Automation to Autonomy: The Humanoid Necessity

Traditional warehouse robots are specialized. A robotic arm picks; a wheeled base moves. However, the modern supply chain is messy and “unstructured.” Boxes fall, aisles get cluttered, and loading docks are rarely uniform.

Humanoid robots represent the ultimate “general-purpose” tool. Because our world is built by humans for humans, a humanoid form factor allows a robot to navigate stairs, reach high shelves, and operate manual equipment without requiring us to redesign the entire warehouse. Embodied AI is the “brain” that turns this mechanical frame into a functional worker.

2. Core Training Paradigms: How Robots Learn

Training a humanoid to walk is difficult; training it to carry a 20 kg box across a slippery floor while avoiding a forklift is a far harder computational problem. Three approaches dominate:

- Reinforcement Learning (RL): The robot learns through trial and error. In a virtual environment, the AI is “rewarded” for successful movements (e.g., staying upright) and “penalized” for failures (e.g., dropping a package).

- Imitation Learning: AI models observe human experts via motion-capture suits or video data. By watching how a human pivots their hips to lift a heavy load, the model learns the “policy” of movement.

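- Sim-to-Real (Simulation to Reality): Training a robot from scratch in a real warehouse is too dangerous and too slow. Instead, policies are trained in “Digital Twins,” simulated replicas of the facility, and then transferred to the physical robot.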
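The reward-and-penalty idea from the Reinforcement Learning item above can be made concrete with a toy reward function. This is a minimal sketch, not a production reward: the `StepOutcome` fields and all weights are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class StepOutcome:
    """Hypothetical summary of one simulated control step."""
    upright: bool              # torso stayed within its tilt limit
    dropped_package: bool      # the carried box left the gripper
    forward_progress_m: float  # distance moved toward the goal this step


def step_reward(outcome: StepOutcome) -> float:
    """Toy shaped reward; the weights are illustrative, not tuned."""
    reward = 1.0 if outcome.upright else -5.0   # reward balance, penalize a fall
    if outcome.dropped_package:
        reward -= 10.0                          # dropping the load is the worst failure
    reward += 2.0 * outcome.forward_progress_m  # small bonus for making progress
    return reward


good = step_reward(StepOutcome(upright=True, dropped_package=False, forward_progress_m=0.5))
bad = step_reward(StepOutcome(upright=False, dropped_package=True, forward_progress_m=0.0))
print(good, bad)
```

An RL algorithm would then adjust the policy to maximize the sum of these per-step rewards over many simulated episodes.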

The Reality Gap: The biggest challenge in Sim-to-Real is the mismatch between the simulator and the real world. The standard mitigation is “Domain Randomization”: developers deliberately vary noise, friction, and lighting in the simulation so that the learned policy does not fail when it meets the imperfections of a real warehouse.
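A rough sketch of randomizing simulation conditions might look like the following. The parameter names and ranges are assumptions made for illustration; a real simulator exposes its own settings, and the ranges would be chosen to bracket conditions measured on site.

```python
import random
from dataclasses import dataclass


@dataclass
class SimParams:
    """Hypothetical per-episode simulation settings."""
    floor_friction: float    # friction coefficient of the floor
    light_intensity: float   # relative to nominal lighting (1.0)
    sensor_noise_std: float  # standard deviation of added sensor noise
    box_mass_kg: float       # mass of the carried box


def sample_sim_params(rng: random.Random) -> SimParams:
    """Draw a fresh set of conditions for one training episode."""
    return SimParams(
        floor_friction=rng.uniform(0.3, 1.0),
        light_intensity=rng.uniform(0.5, 1.5),
        sensor_noise_std=rng.uniform(0.0, 0.05),
        box_mass_kg=rng.uniform(5.0, 20.0),
    )


rng = random.Random(0)  # seeded so that runs are reproducible
episodes = [sample_sim_params(rng) for _ in range(1000)]
print(episodes[0])
```

Because every episode sees slightly different physics and lighting, the policy cannot overfit to one idealized version of the warehouse.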

3. The Multimodal Brain: Beyond Sight

For a humanoid to be multi-functional, it cannot rely on vision alone. Embodied AI models are multimodal, integrating:

- Computer Vision: Reading box labels and estimating depth.

- Tactile Sensing: “Feeling” the grip strength needed so the robot doesn’t crush a fragile parcel.

- Proprioception: The internal sense of where its limbs are in space.
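To illustrate why tactile feedback matters, here is a simplified grip-force calculation for a two-finger pinch grasp. It assumes friction at two contact surfaces must support the parcel's weight, and that the friction coefficient and crush limit are already known; in practice these would be estimated from tactile and slip sensing. The function name, safety factor, and example values are hypothetical.

```python
from typing import Optional

G = 9.81  # gravitational acceleration in m/s^2


def grip_force_n(mass_kg: float, friction_coeff: float,
                 crush_limit_n: float, safety_factor: float = 1.5) -> Optional[float]:
    """Return a grip force in newtons, or None if no safe force exists.

    Two contacts each supply friction of friction_coeff * F, so holding the
    parcel requires 2 * friction_coeff * F >= mass_kg * G.
    """
    if mass_kg <= 0 or friction_coeff <= 0:
        raise ValueError("mass and friction coefficient must be positive")
    required = safety_factor * mass_kg * G / (2.0 * friction_coeff)
    if required > crush_limit_n:
        return None  # gripping hard enough to hold would crush the parcel
    return required


light_parcel = grip_force_n(mass_kg=2.0, friction_coeff=0.5, crush_limit_n=100.0)
heavy_parcel = grip_force_n(mass_kg=20.0, friction_coeff=0.5, crush_limit_n=100.0)
print(light_parcel, heavy_parcel)
```

A `None` result signals that a pinch grasp is unsuitable and the robot should pick a different strategy, such as supporting the box from below.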

Modern models, often called Vision-Language-Action (VLA) models, allow a robot to take a verbal command—“Find the damaged pallet and move it to the inspection zone”—and translate that language into a sequence of physical motor commands.

4. The Hardware-Software Loop

In logistics, the hardware must complement the software. A high-intelligence AI is useless if the robot’s “hands” (end-effectors) lack the degrees of freedom to grasp a doorknob.

| Component | Function in Logistics | AI Requirement |
| --- | --- | --- |
| Bipedal Legs | Navigating stairs/thresholds | Dynamic balance algorithms |
| Dexterous Hands | Picking small or irregular items | High-fidelity tactile feedback |
| Torso Actuators | Lifting and twisting with loads | Torque-control neural nets |

This loop ensures that the robot isn’t just a computer on wheels, but a physical entity that understands physics, gravity, and momentum.

5. Challenges and the Path Forward

Despite the hype, we face significant hurdles:

- Data Scarcity: Text-based AI (like ChatGPT) can train on internet-scale text, but no comparable “limitless” corpus of physical movement data exists.

- Latency: In a fast-moving warehouse, a half-second delay in processing can lead to a collision.

- Battery Density: Humanoids require immense power to balance and lift simultaneously; most current models operate for only 2–4 hours per charge.
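The latency point can be checked with simple kinematics. The sketch below estimates how far a vehicle travels before it stops, counting the distance covered during the processing delay. The speed and braking deceleration are assumed values for illustration, not measurements from any particular vehicle or robot.

```python
def stopping_distance_m(speed_m_s: float, latency_s: float, decel_m_s2: float) -> float:
    """Distance covered during the delay plus the braking distance.

    d = v * t_latency + v^2 / (2 * a)
    """
    if decel_m_s2 <= 0:
        raise ValueError("deceleration must be positive")
    blind_travel = speed_m_s * latency_s               # moving at full speed, not yet reacting
    braking = speed_m_s ** 2 / (2.0 * decel_m_s2)      # constant-deceleration stop
    return blind_travel + braking


# Assumed scenario: 2 m/s closing speed, 0.5 s delay, 4 m/s^2 braking.
with_delay = stopping_distance_m(2.0, 0.5, 4.0)
no_delay = stopping_distance_m(2.0, 0.0, 4.0)
print(with_delay, no_delay)
```

Under these assumptions, the half-second delay adds a full meter of travel before braking even begins, which is a large margin in a narrow aisle.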

Key Takeaways

- Embodied AI is the bridge between digital intelligence and physical labor.

- Sim-to-Real is the primary training ground: parallel, faster-than-real-time simulation lets a policy accumulate far more “practice” than physical trials could provide.

- Humanoid forms are chosen not for aesthetics, but for their ability to fit into existing human infrastructure.

- The Future lies in “General Purpose” robots that can switch from unloading trucks to sorting mail without a hardware swap.

As these models become more refined, the warehouse of 2030 will likely be a collaborative space where humanoids handle the “3Ds”—tasks that are Dull, Dirty, or Dangerous—while humans move into higher-level supervisory roles.